Ever wanted to visualize how your Resque queues, workers, and jobs are doing? In this article, we’re going to learn how to build some simple graphs around the Resque components.

Delegating jobs to a background queue

The delegation of long-running, computationally expensive, and high-latency jobs to a background worker queue is a common pattern used to create scalable web applications. The goal is to serve end-user requests with the fastest possible response by ensuring that all expensive jobs are handled outside the request/response cycle.

Resque

Resque is a Redis-backed Ruby library for creating background jobs, placing them on multiple queues, and processing them later. It is designed for scenarios with a high volume of job entries: Resque provides mechanisms that keep job behavior visible and reliable, and it reports statistics through a web dashboard.

Redis

Redis is an open source (BSD licensed), in-memory data structure store, used as a database, cache, and message broker. It supports data structures such as strings, hashes, lists, sets, sorted sets with range queries, bitmaps, hyperloglogs, and geospatial indexes with radius queries.

Node.js

Node.js is a platform built on Chrome’s JavaScript runtime for easily building fast and scalable network applications. Node.js uses an event-driven, non-blocking I/O model that makes it lightweight and efficient, and thus perfect for data-intensive real-time applications that run across distributed devices.

Express.js

Express.js is a web application framework for Node.js. Because Node.js lets JavaScript run outside the web browser, you can write the server and server-side code for an application in JavaScript, much as you would in other server-side languages.

Socket.IO

Socket.IO is a JavaScript library for real-time web applications. It enables real-time, bi-directional communication between web clients and servers. It has two parts: a client-side library that runs on the browser, and a server-side library for Node.js. Both components have nearly identical APIs.

Heroku

Heroku is a cloud platform that lets companies build, deliver, monitor, and scale apps without having to manage the underlying infrastructure.

This article assumes that you already have Redis, Node.js, and the Heroku Toolbelt installed on your machine.

Setup:

- Download the code from ScaleGrid’s repository.

- Run `npm install` to install the necessary components.

- Finally, start the Node server with `node index.js`. You can also run `nodemon`, which restarts the server whenever a file changes.

Our application uses a port of Resque called node-resque that allows us to watch queues, workers, and jobs running on a Redis cluster.

Understanding the basics

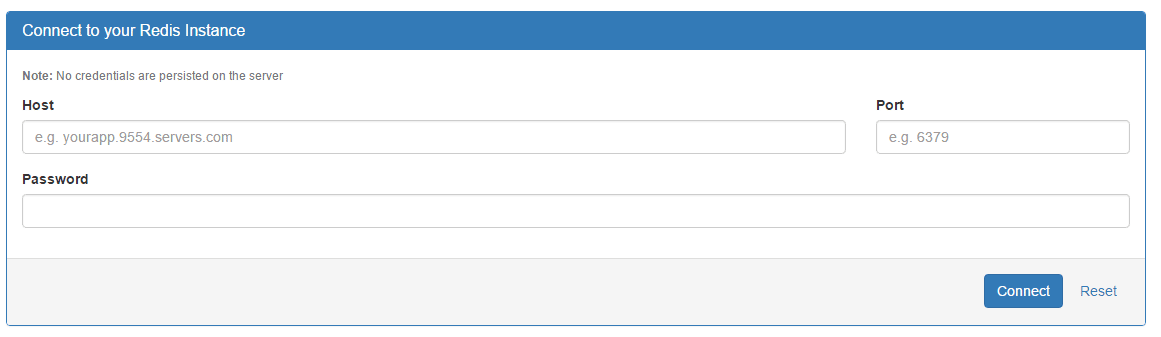

As soon as you start the app, you are required to enter your Redis cluster credentials. Please note that you must have Resque installed and running on your cluster for this to work correctly.

Fortunately, fully managed ScaleGrid for Redis™ provides a high-performance, one-click hosting solution for Redis™. If you’re not already a member, you can sign up for a free 30-day trial to get started.

Otherwise, log in to your dashboard and create a new Redis™ cluster under the Redis™ section. Once your cluster is up and running, you can pick up the necessary details from the Cluster Details Page. You will need the following info:

- Host

- Port

- Password
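One way to keep these three values out of source code is to read them from environment variables. The sketch below is only an illustration: the `REDIS_HOST`, `REDIS_PORT`, and `REDIS_PASSWORD` variable names and the `connectionFromEnv` helper are hypothetical, and the option names your Redis client expects may differ.

```javascript
// Sketch only: the REDIS_* variable names and the returned option names are hypothetical.
function connectionFromEnv(env) {
  if (!env.REDIS_HOST) {
    throw new Error('REDIS_HOST is required');
  }
  const port = Number(env.REDIS_PORT || 6379); // 6379 is the default Redis port
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error('REDIS_PORT must be a valid TCP port');
  }
  return {
    host: env.REDIS_HOST,
    port: port,
    password: env.REDIS_PASSWORD || null,
  };
}

// Example with made-up values; in practice you would pass process.env.
const details = connectionFromEnv({
  REDIS_HOST: 'redis.example.com',
  REDIS_PORT: '6380',
  REDIS_PASSWORD: 's3cret',
});
console.log(details.host, details.port);
```

Validating the port up front means a typo surfaces as a clear error instead of a connection timeout.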

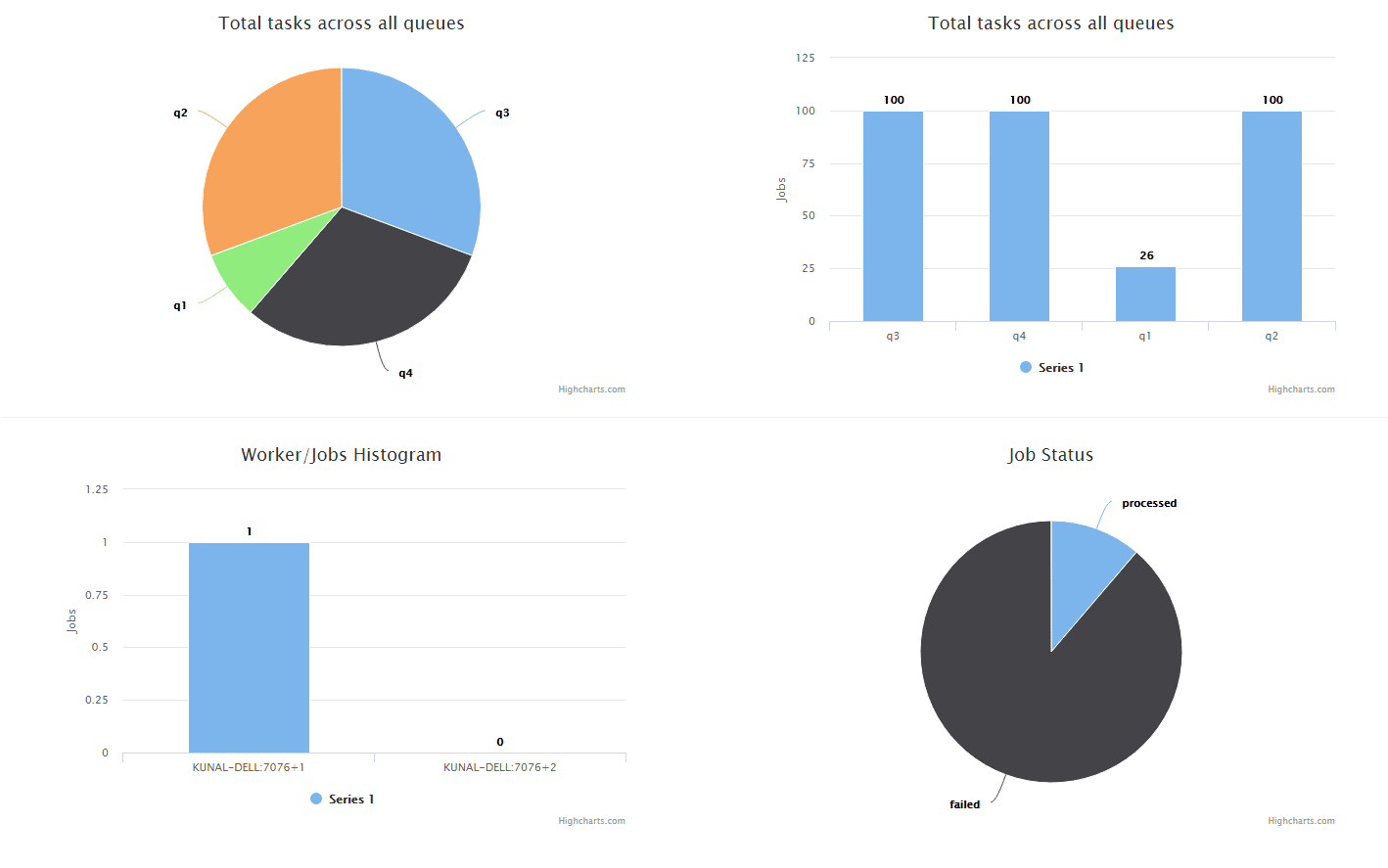

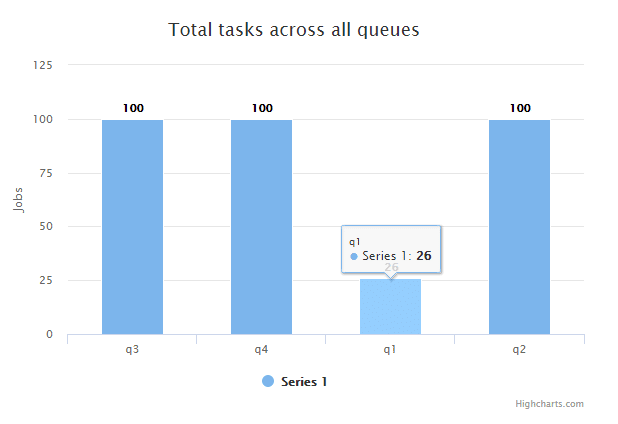

If the connection is successful, you should see a set of graphs.

Let’s discuss each of these graphs in detail. Note that all the graphs are self-updating: if workers are processing jobs on your cluster, the graphs refresh automatically.
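One plausible way to get this kind of self-updating behavior is to poll for statistics and push them to the browser only when something has changed. The sketch below illustrates that idea and is not necessarily how the app does it: `createStatsPoller`, `fetchStats`, and `emit` are hypothetical names, with `fetchStats` standing in for a Redis read and `emit` for a Socket.IO-style emit.

```javascript
// Sketch only: fetchStats and emit are hypothetical stand-ins for a Redis
// read and a Socket.IO-style emit; neither library is used here.
function createStatsPoller(fetchStats, emit) {
  let lastSnapshot = null;
  return function poll() {
    const snapshot = JSON.stringify(fetchStats());
    if (snapshot === lastSnapshot) {
      return false; // nothing changed, so skip the push
    }
    lastSnapshot = snapshot;
    emit('stats', JSON.parse(snapshot));
    return true;
  };
}

// Demo with in-memory data instead of a live cluster.
let demoStats = { math: 2 };
const poll = createStatsPoller(
  () => demoStats,
  (eventName, data) => console.log(eventName, data)
);
poll();                  // emits, because this is the first snapshot
poll();                  // silent, because nothing changed
demoStats = { math: 1 }; // a worker finished a job
poll();                  // emits again
```

In a running server, `poll` would typically be called on a timer, for example with `setInterval(poll, 1000)`.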

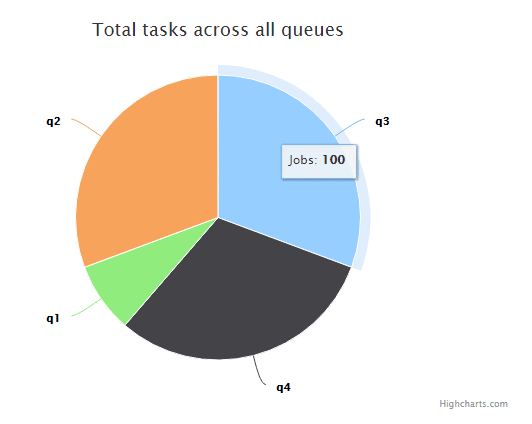

Total tasks across all queues

The above graphs show the total number of Resque queues on your cluster, and the number of jobs contained in each queue. Resque stores a job queue in a Redis list named `resque:queue:name`, where `name` is the queue's name, and each element in the list is a hash serialized as a JSON string. Resque also has its own management structures, including a “failed” job list. Resque namespaces its data within Redis with the `resque:` prefix, so the Redis instance can be shared with other users.
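To make the key layout concrete, here is a minimal sketch that builds a queue key and counts jobs per queue. It uses a plain object as a stand-in for Redis lists, so no connection is made; the `queueKey` and `jobsPerQueue` helpers and the sample data are hypothetical.

```javascript
// Sketch only: helper names and sample data are hypothetical; no Redis connection is made.
const QUEUE_PREFIX = 'resque:queue:';

// Build the Redis key of the list that backs a queue.
function queueKey(queueName) {
  return QUEUE_PREFIX + queueName;
}

// Given an object standing in for Redis lists (key -> array of JSON strings),
// return the number of pending jobs per queue, ignoring non-queue keys.
function jobsPerQueue(listsByKey) {
  const counts = {};
  for (const [key, items] of Object.entries(listsByKey)) {
    if (key.startsWith(QUEUE_PREFIX)) {
      counts[key.slice(QUEUE_PREFIX.length)] = items.length;
    }
  }
  return counts;
}

const sample = {
  [queueKey('math')]: ['{"class":"add","args":[1,2]}', '{"class":"add","args":[3,4]}'],
  [queueKey('mail')]: [],
  'resque:failed': ['{"error":"boom"}'],
};
console.log(jobsPerQueue(sample)); // { math: 2, mail: 0 }
```

Against a live cluster, the per-queue count would come from the length of each list (the Redis `LLEN` command) rather than from an in-memory array.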

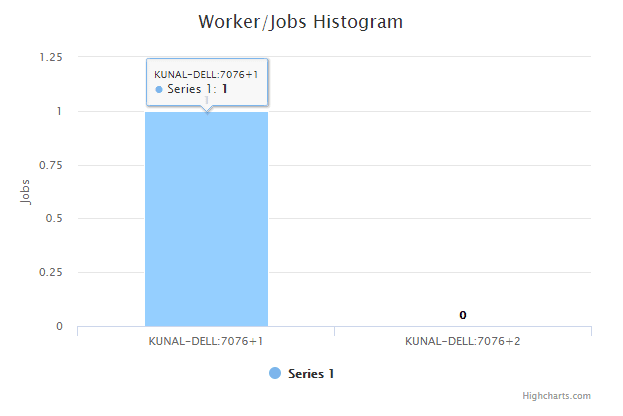

Worker/jobs histogram

A Resque worker processes jobs. On platforms that support fork(2), the worker will fork off a child to process each job. This ensures a clean slate when beginning the next job and cuts down on gradual memory growth as well as low-level failures.

It also ensures that workers are always listening to signals from you, their master, and can react accordingly.

The above graph shows all the workers on the Redis cluster. A state of 1 indicates that a job has been assigned to the worker and is in progress, and a state of 0 indicates that the worker is free/idle.
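The 0/1 encoding can be sketched as a small mapping function. The input shape used here (a worker name mapped to its current job, or `null` when idle) and the worker names are assumptions made for illustration, not the format Resque or the app uses internally.

```javascript
// Sketch only: the input shape is a hypothetical stand-in for worker data read from Redis.
function workerStates(currentJobByWorker) {
  const states = {};
  for (const [workerName, job] of Object.entries(currentJobByWorker)) {
    states[workerName] = job ? 1 : 0; // 1 = job in progress, 0 = free/idle
  }
  return states;
}

const sample = {
  'host-a:1234:math': { queue: 'math', payload: { class: 'add', args: [1, 2] } },
  'host-b:5678:math': null,
};
// host-a is busy (state 1), host-b is idle (state 0).
console.log(workerStates(sample));
```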

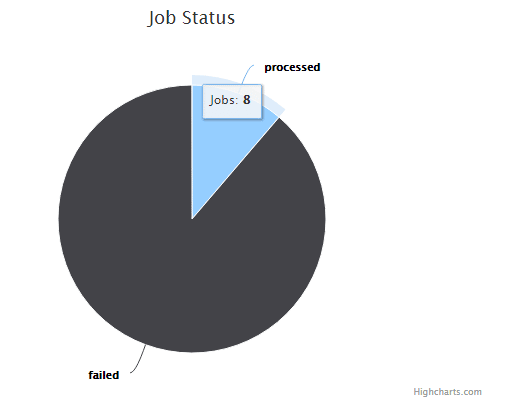

Job status

A Resque job represents a unit of work. Each job lives on a single queue and has an associated payload object. The payload is a hash with two attributes: `class` and `args`. The `class` is the name of the Ruby class which should be used to run the job. The `args` are an array of arguments which should be passed to the Ruby class’s `perform` class-level method.
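To make the payload shape concrete, here is a minimal JavaScript sketch that decodes a payload and dispatches it to a matching `perform` function. It mirrors the Ruby behavior only loosely: the `handlers` table and the `runPayload` helper are hypothetical, and a real worker would read the JSON string from Redis instead of building it inline.

```javascript
// Sketch only: `handlers` and `runPayload` are hypothetical names.
const handlers = {
  add: { perform: (a, b) => a + b },
};

// Decode a JSON payload and call the handler named by its `class` attribute,
// spreading `args` into the handler's perform function.
function runPayload(json, table) {
  const payload = JSON.parse(json);
  if (!Object.prototype.hasOwnProperty.call(table, payload.class)) {
    throw new Error(`No handler for class "${payload.class}"`);
  }
  return table[payload.class].perform(...payload.args);
}

const raw = JSON.stringify({ class: 'add', args: [2, 3] });
console.log(runPayload(raw, handlers)); // 5
```

The own-property check keeps a payload from resolving to something inherited from `Object.prototype`, such as `constructor`.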

The above graph shows the status of jobs as processed or failed. A job is marked as failed if the worker could not execute it. Here’s an example of a simple jobs object:

```javascript
var jobs = {
  // "add" runs with the jobLock and retry plugins enabled.
  "add": {
    plugins: [ 'jobLock', 'retry' ],
    pluginOptions: {
      jobLock: {},
      retry: {
        retryLimit: 3,
        retryDelay: (1000 * 5), // 5 seconds, expressed in milliseconds
      }
    },
    perform: function(a, b, callback){
      var answer = a + b;
      callback(null, answer);
    },
  },
  // "subtract" uses no plugins.
  "subtract": {
    perform: function(a, b, callback){
      var answer = a - b;
      callback(null, answer);
    },
  },
};
```
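To see what happens when such a job runs, the sketch below calls a `perform` function directly with a Node-style callback, outside of any queue. The trimmed-down `demoJobs` object and the `runJob` helper are hypothetical stand-ins for the worker machinery, and plugins are not exercised.

```javascript
// Sketch only: `demoJobs` and `runJob` are hypothetical; no queue or plugin is involved.
const demoJobs = {
  add: {
    perform: function (a, b, callback) {
      callback(null, a + b);
    },
  },
};

// Look up a job by name and invoke its perform function with the given
// arguments, reporting the outcome through a Node-style callback.
function runJob(jobs, name, args, done) {
  if (!Object.prototype.hasOwnProperty.call(jobs, name)) {
    return done(new Error(`Unknown job: ${name}`));
  }
  jobs[name].perform(...args, done);
}

runJob(demoJobs, 'add', [1, 2], (err, result) => {
  if (err) throw err;
  console.log(result); // 3
});
```

An unknown job name is reported through the callback's first argument rather than thrown, which matches the error-first convention that the `perform` functions use.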

As always, if you build something awesome, do tweet us about it @scalegridio.