High availability clustering keeps your IT systems running without interruption, even when components fail. This guide explains what high availability clustering is, what its key components are, and how it works. You’ll also learn how to design, implement, and maintain these clusters to ensure continuous service. High-availability software solutions keep systems available and help protect against revenue loss.

Key Takeaways

- High availability clusters ensure continuous operation of IT applications or systems, even during component failures, which is crucial for the finance, healthcare, and e-commerce sectors.

- Key components of high-availability clusters include cluster nodes, shared storage, and network hardware, each contributing to redundancy and failover capabilities to maintain service continuity.

- Effective high availability clustering involves mechanisms like load balancing, failover systems, and heartbeat monitoring, as well as best practices such as regular testing, vigilant monitoring, and robust backup and recovery plans to minimize downtime.

- High-availability clusters are essential for mission-critical applications such as databases, e-commerce websites, and transaction processing systems. They aim for near-continuous uptime and no message loss, backed by robust failover management and high reliability.

Understanding High Availability Clustering

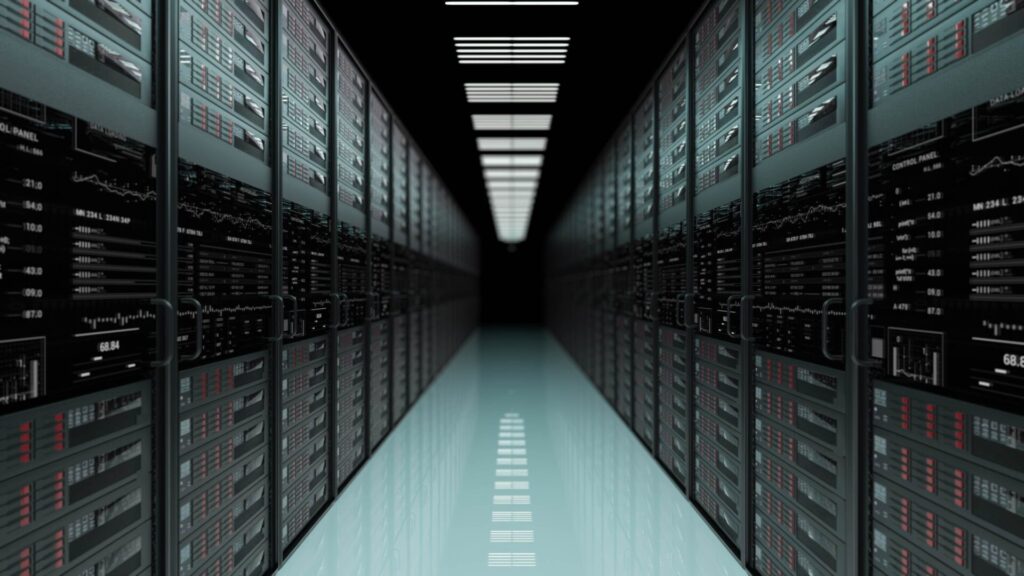

In IT, high availability refers to systems, components, or applications that operate continuously over an extended period without requiring manual intervention. High availability clusters are groups of computers that keep server applications running with minimal downtime, forming a robust foundation for dependable server infrastructure. They are engineered to keep IT applications or systems operating despite component failures, so users get a consistent experience that is not interrupted by hardware or software malfunctions.

Metrics such as mean time between failures (MTBF) and mean down time (MDT) are critical when assessing the availability of a system. They let organizations gauge their system’s reliability and resilience. Because steady-state availability equals MTBF / (MTBF + MDT), a long MTBF combined with a short MDT indicates high availability. High-availability clusters aim for uptime expressed in ‘nines,’ ranging from 99% (‘two nines’) up to 99.999% (‘five nines’). Reaching these levels requires comprehensive planning and deployment strategies built on redundancy and failover protocols.
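The arithmetic can be sketched roughly in Python. The sketch below computes steady-state availability from MTBF and MDT and converts a ‘nines’ target into the yearly downtime that target allows. It assumes a 365-day year and ignores planned maintenance. The function names are illustrative only.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years ignored


def availability(mtbf_hours: float, mdt_hours: float) -> float:
    """Steady-state availability: the fraction of time the system is up."""
    return mtbf_hours / (mtbf_hours + mdt_hours)


def downtime_minutes_per_year(target: float) -> float:
    """Maximum yearly downtime allowed by an availability target such as 0.9999."""
    return (1.0 - target) * HOURS_PER_YEAR * 60


if __name__ == "__main__":
    # A node that fails on average every 2,000 hours and takes 1 hour to restore.
    print(f"{availability(2000, 1):.5f}")  # 0.99950
    for target in (0.99, 0.999, 0.9999, 0.99999):
        print(f"{target:.3%} uptime allows {downtime_minutes_per_year(target):.1f} min/year")
```

Each extra nine cuts the allowed downtime by a factor of ten. At 99%, the allowance is about 5,256 minutes (more than 87 hours) per year. At 99.999%, it drops to about 5.3 minutes.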

High-availability clusters play a pivotal role, especially in supporting mission-critical functions like:

- Essential database services

- Sharing files across networks

- Core business operations

- Customer-centric activities, including e-commerce platforms and storage area network solutions

- Transaction processing systems

These cluster configurations keep services running without interruption. This is crucial in fields such as banking, finance, and e-commerce, where systems must function around the clock. High availability is likewise indispensable in healthcare, where life-saving processes demand continual access to systems and any lapse can critically affect patient care.

The advantages conferred by implementing high availability systems include:

- Greater operational flexibility

- Simplified maintenance procedures

- Full adherence to the Service-Level Agreements (SLAs) offered by Managed Services Providers (MSPs)

- Preserved data integrity and security

- An improved reputation among stakeholders, thanks to consistently reliable service

Keeping systems running even during catastrophic incidents underpins the business continuity plans that modern organizations require. In virtualized environments specifically, high availability remains integral to minimizing downtime and keeping business operations flowing.

Key Components of High Availability Clusters

Several critical elements, such as cluster nodes, shared storage, and network hardware, form the backbone of high-availability clusters. Together, they use redundancy and failover mechanisms to keep services running when individual components fail, ensuring minimal downtime and uninterrupted service.

Cluster Nodes

Each node in a high availability (HA) cluster plays an integral part in keeping services running, even when malfunctions occur. The nodes work together to sustain service continuity, in either an active/passive arrangement or an active/active (load-balanced) one. To achieve real redundancy, all nodes should be configured with identical capabilities and settings so that any node can take over another’s work. This uniformity is vital for systems handling high-availability file transfers, where any interruption could result in substantial data loss.

If the primary server fails, secondary redundant nodes, set up for example as backup databases, are ready to take over without losing access to data. Whenever one node in an HA cluster goes down, the remaining nodes take on its tasks, so operations across the entire cluster continue unhampered. This built-in redundancy and failover is what makes high-availability clusters reliable and robust.

Shared Storage

Shared storage is essential to high availability because it supports data replication. When disk storage is accessible to multiple nodes, data remains available even if one node fails. This layer of redundancy is critical for uninterrupted data access and prevents loss of information.

In a high availability cluster, solutions such as global file systems and storage area networks provide replicated, redundant storage so that every node can work with the same data. This configuration improves data reliability. It also streamlines maintenance and oversight, because storage for all nodes is administered centrally.

Network Hardware

Network hardware is crucial to operating high-availability clusters. These clusters typically use a dedicated private heartbeat network to continuously check the health and status of each node. The heartbeat mechanism sends periodic signals between nodes to confirm that they are active and responsive. This is essential for recognizing when a node goes down and triggering the appropriate failover.

To achieve an effective high-availability architecture, all network hardware, such as routers, switches, and interfaces, must be reliable and redundant. This allows communication throughout the cluster to continue even if individual network elements fail. With strong and resilient network hardware, businesses can depend on their high-availability solutions to maintain consistent performance without interruptions.

How High Availability Clustering Works

Several mechanisms, including load balancing, failover protocols, and heartbeat monitoring, are employed in high availability clustering to ensure service continuity and minimize disruptions. These components collectively maintain the cluster’s functionality despite hardware or software malfunctions. High-availability clusters are designed to ensure the continuous operation of the primary system by allowing for immediate failover to alternate servers in case of a failure.

Load Balancing

Load balancing plays a key role in distributing requests among servers, ensuring that no single server faces excessive traffic. This is essential for high availability, as it enhances resource efficiency and avoids overloading any particular server. Common load-balancing techniques utilize algorithms such as round-robin, least connections, and IP hash to manage this process effectively.
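Here is a minimal sketch of the three algorithms named above, written in Python for illustration only. The class names and server names are hypothetical. A production load balancer would also track server health and handle concurrent requests.

```python
import hashlib
import itertools


class RoundRobin:
    """Hand out servers in a fixed rotating order."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self, client_ip=None):
        return next(self._cycle)


class LeastConnections:
    """Send each new request to the server with the fewest active connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def pick(self, client_ip=None):
        server = min(self.active, key=self.active.get)  # ties go to the first server listed
        self.active[server] += 1
        return server

    def release(self, server):
        """Call when a request finishes so the connection count stays accurate."""
        self.active[server] -= 1


class IPHash:
    """Always map the same client IP to the same server (session affinity)."""

    def __init__(self, servers):
        self.servers = list(servers)

    def pick(self, client_ip):
        digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
        return self.servers[int.from_bytes(digest[:8], "big") % len(self.servers)]


if __name__ == "__main__":
    servers = ["app1", "app2", "app3"]
    rr = RoundRobin(servers)
    print([rr.pick() for _ in range(4)])  # ['app1', 'app2', 'app3', 'app1']
    ip = IPHash(servers)
    print(ip.pick("203.0.113.7") == ip.pick("203.0.113.7"))  # True
```

IP hashing keeps each client on the same server. With simple modulo hashing, however, most clients are remapped whenever a server is added or removed. Consistent hashing is a common way to reduce that churn.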

Within high-availability clusters, load balancers route client requests to the servers that are currently available while tracking each node’s health and activity. The goal is to spread the load so the cluster can absorb heavy volumes of incoming traffic without performance problems or downtime. By combining load balancers with failure detectors, organizations can distribute demand more evenly and quickly identify when an active component stops working.

Failover Mechanisms

When a system component fails, failover transfers its workload to a standby component so that service continues without interruption. Related technologies such as VMware vMotion and XenServer XenMotion allow running virtual machines to be migrated between cluster hosts without downtime. This is especially useful for moving workloads off a host before planned maintenance.

In active/passive clusters, this automated switch typically involves moving the IP address and associated services to an idle standby node, so traffic is redirected there when the active node fails. In an active/active model, when one node fails, clients automatically connect to another node, and a load balancer directs traffic only to nodes that can handle it. Such automatic switching is pivotal to high availability because it enables swift recovery from failures while minimizing service disruption.
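The active/passive case can be modeled as a small state machine. In this hypothetical Python sketch, a virtual IP (VIP) address always belongs to the active node. Failover swaps the roles when the active node is marked unhealthy. Real cluster managers also fence the failed node and restart services, which this sketch leaves out.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    healthy: bool = True


@dataclass
class ActivePassivePair:
    """Hypothetical two-node cluster in which exactly one node owns the virtual IP."""
    active: Node
    standby: Node
    vip: str
    events: list = field(default_factory=list)

    def check_and_failover(self) -> None:
        """Swap roles if the active node is unhealthy and the standby is healthy."""
        if self.active.healthy:
            return
        if not self.standby.healthy:
            self.events.append("both nodes down: service unavailable")
            return
        # Move the VIP (and the services bound to it) to the standby node.
        self.events.append(f"moving {self.vip} from {self.active.name} to {self.standby.name}")
        self.active, self.standby = self.standby, self.active

    @property
    def vip_owner(self) -> str:
        return self.active.name


if __name__ == "__main__":
    pair = ActivePassivePair(Node("db1"), Node("db2"), vip="10.0.0.100")
    pair.active.healthy = False      # simulate a crash of db1
    pair.check_and_failover()
    print(pair.vip_owner)            # db2
    print(pair.events[-1])           # moving 10.0.0.100 from db1 to db2
```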

Heartbeat Monitoring

Heartbeat monitoring works as follows:

- Nodes regularly send signals to one another to confirm that each is ready and responsive.

- In a high-availability architecture, these heartbeats are sent continuously to track each node’s condition.

- A missed heartbeat is how the cluster detects that a node has malfunctioned and initiates the necessary failover.

In active/passive clusters, the passive nodes continuously monitor the health of the active node. Many organizations use open-source heartbeat software that transmits data packets to confirm that each node is operating, keeping their high-availability clusters functional and responsive.
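Timeout-based failure detection can be sketched in Python as follows. The 3-second timeout, the node names, and the injectable clock are illustrative assumptions. Real heartbeat software must also cope with network partitions. It is often configured to tolerate a few missed heartbeats before declaring a node dead.

```python
import time


class HeartbeatMonitor:
    """Declare a node failed if no heartbeat has arrived within `timeout` seconds."""

    def __init__(self, nodes, timeout=3.0, clock=time.monotonic):
        self.timeout = timeout
        self.clock = clock              # injectable so the logic can be tested without sleeping
        start = clock()
        self.last_seen = {node: start for node in nodes}

    def record_heartbeat(self, node):
        """Called whenever a heartbeat packet from `node` is received."""
        self.last_seen[node] = self.clock()

    def failed_nodes(self):
        """Nodes whose most recent heartbeat is older than the timeout."""
        now = self.clock()
        return sorted(n for n, seen in self.last_seen.items() if now - seen > self.timeout)


if __name__ == "__main__":
    fake_time = [0.0]
    monitor = HeartbeatMonitor(["node-a", "node-b"], timeout=3.0, clock=lambda: fake_time[0])
    fake_time[0] = 2.0
    monitor.record_heartbeat("node-a")   # node-a checks in; node-b stays silent
    fake_time[0] = 4.0
    print(monitor.failed_nodes())        # ['node-b']
```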

Types of High Availability Clusters

Clusters designed for high availability fall into two main types: active/passive and active/active. Each has specific features and use cases that make it suited to different situations.

Active/Passive Clusters

In active/passive (also called primary/standby or master/slave) clusters, a primary node handles the entire workload while the secondary nodes stay on standby. Only one node actively serves clients, and it continues to do so until it fails. Adding passive nodes substantially increases the redundancy of the cluster.

If the primary node fails, a passive node takes over its tasks to keep service running. Keeping reserve nodes on standby bolsters fault tolerance and system dependability. Because the standby takes over automatically, downtime is reduced or eliminated.

Active/Active Clusters

Distributed computing systems benefit from an active-active cluster configuration by increasing fault tolerance and minimizing disruptions, as the overall traffic and tasks are evenly distributed across all nodes. Should a node become inoperative within this setup, the remaining functioning nodes take over its workload seamlessly to maintain constant service availability. This approach is particularly advantageous for scenarios that experience large amounts of traffic because it divides the load among several active nodes, thereby boosting performance and dependability.

An active/active cluster balances resource allocation across its nodes, maximizing efficiency and capacity usage. Such clusters use routing methods such as round-robin distribution or weighted assignment to manage workloads across multiple servers. These strategies let each node make full use of its capacity. They also reduce the risk that a single server is overwhelmed in the high-traffic conditions typical of distributed computing environments.
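Weighted assignment can be illustrated with the ‘smooth weighted round-robin’ scheme, similar to the one nginx uses for weighted upstream servers. This Python sketch is illustrative. The server names and weights are made up, and the hypothetical `down` parameter shows how traffic is redistributed across the remaining nodes when one fails.

```python
class SmoothWeightedRoundRobin:
    """Weighted round-robin that interleaves picks instead of sending bursts to one server."""

    def __init__(self, weights):
        self.weights = dict(weights)                 # server -> positive integer weight
        self.current = {s: 0 for s in self.weights}  # running score per server

    def pick(self, down=frozenset()):
        """Choose a server, skipping any listed in `down` (for example, failed nodes)."""
        eligible = [s for s in self.weights if s not in down]
        if not eligible:
            raise RuntimeError("no healthy servers available")
        for s in eligible:
            self.current[s] += self.weights[s]
        best = max(eligible, key=lambda s: self.current[s])
        self.current[best] -= sum(self.weights[s] for s in eligible)
        return best


if __name__ == "__main__":
    lb = SmoothWeightedRoundRobin({"big": 5, "small-1": 1, "small-2": 1})
    print([lb.pick() for _ in range(7)])
    # ['big', 'big', 'small-1', 'big', 'small-2', 'big', 'big']
```

Over each cycle of seven picks, the heavier server receives five requests, but they are spread out rather than sent back to back.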

Designing a High-Availability Architecture

In constructing a high-availability architecture, one must focus on the following aspects:

- Developing robust and redundant systems that continue to operate despite malfunctions with minimal downtime

- Eliminating single points of failure

- Guaranteeing the scalability of data

- Integrating diversity across different geographical locations

Avoiding Single Points of Failure

A central goal of high-availability design is to eliminate every single point of failure. This can be achieved through strategies such as active replication or a failover system. To find potential single points of failure, review the architecture diagram of the entire system and evaluate each component in turn.
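One mechanical way to do this review is to model the architecture as a graph. For each component, check whether removing it cuts clients off from the service. The Python sketch below does this by brute force. The topology and component names are hypothetical, and the model captures only connectivity, not capacity.

```python
from collections import deque


def reachable(graph, start, goal, removed=None):
    """Breadth-first search from start to goal that treats `removed` as failed."""
    if removed in (start, goal):
        return False
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for nxt in graph.get(node, ()):
            if nxt != removed and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


def single_points_of_failure(graph, client, service):
    """Components whose individual failure disconnects the client from the service."""
    components = set(graph) | {n for nbrs in graph.values() for n in nbrs}
    components -= {client, service}
    return sorted(c for c in components if not reachable(graph, client, service, removed=c))


if __name__ == "__main__":
    # Directed edges: which component forwards traffic to which.
    topology = {
        "client": ["switch"],
        "switch": ["lb1", "lb2"],
        "lb1": ["app1", "app2"],
        "lb2": ["app1", "app2"],
        "app1": ["db"],
        "app2": ["db"],
        "db": ["service"],
    }
    print(single_points_of_failure(topology, "client", "service"))  # ['db', 'switch']
```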

Redundancy is usually introduced by running multiple copies of essential components, so that no single component can disrupt service. Bulkheads partition the system into isolated sections so that a failure in one part does not spread to the rest. Redundant network paths keep connectivity unbroken and further strengthen the system against interruptions.
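The benefit of redundancy can be quantified with two standard formulas, sketched below in Python. The formulas assume that components fail independently, which real systems only approximate. The series case shows why a single non-redundant component caps the availability of everything behind it.

```python
from math import prod


def series(*availabilities: float) -> float:
    """Components chained one after another: the path is up only if all of them are up."""
    return prod(availabilities)


def parallel(*availabilities: float) -> float:
    """Redundant copies: the group is down only if every copy is down at the same time."""
    return 1 - prod(1 - a for a in availabilities)


if __name__ == "__main__":
    single_server = 0.99
    print(f"{parallel(single_server, single_server):.4f}")  # 0.9999
    # A 99.9% load balancer in front of two redundant 99% app servers.
    print(f"{series(0.999, parallel(0.99, 0.99)):.6f}")  # 0.998900
```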

Data Scalability

To keep high-availability (HA) clusters scalable, organizations must deploy horizontal scaling strategies and data replication. Data sharding is a key method: it splits a table horizontally, assigning subsets of its rows (shards) to different servers. Breaking a vast database into smaller segments in this way makes it far easier to manage.
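Hash-based sharding is one common way to decide which shard a row belongs to. Range-based sharding is another. In this Python sketch, the field names and the shard count are hypothetical. Python’s built-in `hash()` is randomized per process for strings, so the sketch uses `hashlib` to keep shard assignments stable across restarts.

```python
import hashlib


def shard_for(key: str, num_shards: int) -> int:
    """Map a shard key to a shard index using a hash that is stable across processes."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def partition(rows, key_field, num_shards):
    """Split rows horizontally: each row goes to exactly one shard based on its key."""
    shards = [[] for _ in range(num_shards)]
    for row in rows:
        shards[shard_for(str(row[key_field]), num_shards)].append(row)
    return shards


if __name__ == "__main__":
    customers = [{"customer_id": i, "name": f"customer-{i}"} for i in range(1, 11)]
    for index, shard in enumerate(partition(customers, "customer_id", 3)):
        print(index, [row["customer_id"] for row in shard])
```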

Active/active HA clusters designed for scalability can handle growing traffic volumes and expanding user bases. Stateful components in these systems need additional protocols to synchronize state so that all copies of the data stay consistent. With these methods, organizations can keep their high-availability clusters efficient and able to scale to meet demand.

Geographical Diversity

Deploying HA clusters across multiple geographical locations improves resiliency and keeps services running. This strategy guards against local catastrophes, protecting services from a complete data center outage. Distributing workloads across locations also keeps any single site from becoming a single point of failure.

To strengthen disaster recovery, cloud providers operate multiple data centers grouped into ‘regions’ and ‘availability zones.’ Modern services let customers choose where in the world their servers are placed, for greater resilience. For disaster recovery, active/passive configurations are common, with the passive node located in a different geographic region from the active one.
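The placement rule for active/passive disaster recovery can be expressed as a small check. This hypothetical Python sketch picks an active node in one region and places each standby in a different region. The region and node names are placeholders, not any provider’s actual identifiers.

```python
def place_active_passive(nodes_by_region, active_region, standbys=1):
    """Put the active node in `active_region` and each standby in a different region."""
    if not nodes_by_region.get(active_region):
        raise ValueError(f"no nodes available in {active_region}")
    other_regions = [r for r in sorted(nodes_by_region)
                     if r != active_region and nodes_by_region[r]]
    if len(other_regions) < standbys:
        raise ValueError("not enough other regions to separate every standby")
    return {
        "active": (active_region, nodes_by_region[active_region][0]),
        "standby": [(r, nodes_by_region[r][0]) for r in other_regions[:standbys]],
    }


if __name__ == "__main__":
    inventory = {  # hypothetical region and node names
        "region-east": ["node-1", "node-2"],
        "region-west": ["node-3"],
        "region-central": ["node-4"],
    }
    print(place_active_passive(inventory, "region-east"))
    # {'active': ('region-east', 'node-1'), 'standby': [('region-central', 'node-4')]}
```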

Implementing High Availability with ScaleGrid

ScaleGrid delivers high-availability solutions tailored to database needs, providing consistent access to vital data and services. Its high-availability software helps systems sustain peak performance through features such as load balancing, automatic failover, and synchronous data replication, which are key elements for maximizing uptime in demanding environments. With ScaleGrid’s high-availability architectures, businesses can keep their databases fully functional despite hardware or software component failures.

Among ScaleGrid’s offerings is a mechanism that responds to node failures by holding an election that promotes a standby server to the primary (master) role. This keeps service interruptions to a minimum and maintains continuous access for users.
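Here is a generic Python sketch of how a standby might be chosen in such an election. It is not ScaleGrid’s implementation. It assumes a majority quorum, which prevents two primaries from emerging in a split network. It also assumes each replica has a replication position and a priority flag, both of which are illustrative. Finally, it simplifies ‘reachable’ to a single shared view of the cluster.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    name: str
    reachable: bool
    last_applied: int  # hypothetical replication position, e.g. a log sequence number
    priority: int = 1  # hypothetical; 0 means "never promote this node"


def elect_new_primary(replicas, cluster_size):
    """Return the standby to promote, or None if no majority of the cluster is reachable."""
    voters = [r for r in replicas if r.reachable]
    if len(voters) <= cluster_size // 2:
        return None  # without a majority, refuse to promote rather than risk a split brain
    candidates = [r for r in voters if r.priority > 0]
    if not candidates:
        return None
    # Prefer the most up-to-date replica so the least data is lost; break ties by priority.
    return max(candidates, key=lambda r: (r.last_applied, r.priority)).name


if __name__ == "__main__":
    # Three-node cluster whose primary has just failed; two standbys remain.
    standbys = [Replica("standby-1", True, 1040), Replica("standby-2", True, 1052)]
    print(elect_new_primary(standbys, cluster_size=3))  # standby-2
```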

ScaleGrid’s platform is designed to scale with growing data volumes and rising user demand without sacrificing reliability or throughput.

High Availability vs. Disaster Recovery

High availability aims to prevent service interruptions through redundancy and automatic failover, targeting continuous uptime measured as a percentage. High-availability solutions minimize the impact of common disruptions by guaranteeing users consistent access to services.

Disaster recovery focuses on standardized procedures for recovering from catastrophic events, whether human-induced or natural, that may lead to data corruption. It measures restoration with two metrics. The recovery time objective (RTO) is how quickly systems must be back online. The recovery point objective (RPO) is the maximum acceptable amount of lost data. Disaster recovery plans cover pre-emptive measures, immediate responses during an incident, and post-event processes for restoring systems quickly and efficiently.

Organizations implement remote failover so that transaction processing continues if their primary site suffers an unscheduled outage. Operations switch over to an offsite backup system, keeping critical functions running without significant delays or losses.

Best Practices for Maintaining High Availability

Sustaining high availability requires following best practices, including regular testing and maintenance, diligent monitoring and reporting, and robust backup and recovery plans. These measures keep high-availability clusters operational and able to handle system failures with minimal downtime.

Regular Testing and Maintenance

Testing high-availability clusters regularly, at least once a year, is crucial to confirm that they work and to identify potential issues. Effective maintenance of these clusters involves:

- Regular updates and patches to maintain system security and functionality

- Scheduling server outages for maintenance

- Ensuring all hosts in a cluster operate on identical software versions and configurations

By following these practices and implementing a cluster resource manager, organizations can minimize downtime and ensure the smooth operation of their high-availability clusters.

Testing patches or updates before deployment is crucial to avoid introducing new vulnerabilities or issues. Regular maintenance also involves checking the health of backup systems and ensuring that failover mechanisms are functioning as intended. By adhering to these practices, organizations can maintain the reliability and robustness of their high-availability clusters.
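Checking that every host runs the same software versions is easy to automate. This Python sketch compares a hypothetical inventory (host, then package, then version) against the majority version of each package. How the inventory is collected is left out, and the package names are made up.

```python
from collections import Counter


def find_drift(host_inventory):
    """Report hosts whose package versions differ from the cluster's majority version."""
    packages = {pkg for versions in host_inventory.values() for pkg in versions}
    drift = {}
    for pkg in sorted(packages):
        seen = Counter(v.get(pkg, "<missing>") for v in host_inventory.values())
        expected, _ = seen.most_common(1)[0]  # the version most hosts run
        for host, versions in host_inventory.items():
            actual = versions.get(pkg, "<missing>")
            if actual != expected:
                drift.setdefault(host, {})[pkg] = (actual, expected)
    return drift


if __name__ == "__main__":
    inventory = {  # hypothetical hosts and packages
        "node-1": {"database": "15.4", "ha-agent": "2.1"},
        "node-2": {"database": "15.4", "ha-agent": "2.1"},
        "node-3": {"database": "15.3", "ha-agent": "2.1"},
    }
    print(find_drift(inventory))  # {'node-3': {'database': ('15.3', '15.4')}}
```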

Monitoring and Reporting

Continuously monitoring a cluster’s health is essential for detecting and resolving problems promptly. IT administrators typically use an open-source program that sends heartbeat signals, in the form of data packets, to each machine in the cluster. This provides real-time tracking of each node’s availability and response time. Monitoring should also cover the status of network interfaces and power supplies.

Having efficient tools for both monitoring and reporting plays a significant role in preemptively identifying issues before they escalate into major complications. With alert systems and notifications set up, administrators can react swiftly to irregularities, helping maintain the system’s high availability without interruptions. Consistent reports aid in pinpointing patterns or specific aspects that might need attention or enhancement. This proactive approach significantly aids in sustaining both robustness and reliability within the high-availability system.

Backup and Recovery Plans

In environments that prioritize high availability, backup and recovery strategies are paramount for ensuring ongoing operations and safeguarding against data loss. The Recovery Time Objective (RTO) sets the maximum time a system can be offline without causing significant disruption. The Recovery Point Objective (RPO) sets the maximum amount of data, measured in time, that can be lost before business processes are adversely affected. Setting precise RTO and RPO targets gives organizations a framework for designing effective backup and disaster recovery plans.
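A simple worked check makes the two objectives concrete. The Python sketch below assumes that periodic backups are the only protection, with no replication or log shipping. It also assumes the restore time is a measured estimate. The numbers are illustrative.

```python
from datetime import timedelta


def meets_objectives(backup_interval: timedelta, restore_time: timedelta,
                     rpo: timedelta, rto: timedelta) -> dict:
    """Check a periodic backup plan against RPO and RTO targets.

    With backups alone, a failure just before the next backup loses everything
    written since the previous one, so the worst-case data loss equals the interval.
    """
    return {
        "rpo_met": backup_interval <= rpo,
        "rto_met": restore_time <= rto,
    }


if __name__ == "__main__":
    # Nightly backups that take about 2 hours to restore, against a 1 h RPO and 4 h RTO.
    print(meets_objectives(timedelta(hours=24), timedelta(hours=2),
                           rpo=timedelta(hours=1), rto=timedelta(hours=4)))
    # {'rpo_met': False, 'rto_met': True}
```

A failed RPO check like this one signals that the backup interval should be shortened, or that replication or log shipping should be added.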

All production databases in such high-stakes settings need regular full backups to limit potential data loss. For large databases in particular, differential backups can complement full backups to help meet RTO targets. Replication techniques such as SQL Server Replication, along with methods such as log shipping and database mirroring, offer further ways to preserve data integrity and enable rapid restoration after an incident.
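Differential backups are valuable because they keep the restore chain short. This hypothetical Python sketch selects the backups needed for a restore: the most recent full backup plus only the newest differential taken after it. It restores to the time of that differential. Recovering to an exact later moment would also require transaction log backups, which the sketch ignores.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Backup:
    kind: str          # "full" or "differential"
    taken_at: datetime


def restore_chain(backups, target):
    """Backups needed to restore to the latest point at or before `target`."""
    usable = sorted((b for b in backups if b.taken_at <= target), key=lambda b: b.taken_at)
    fulls = [b for b in usable if b.kind == "full"]
    if not fulls:
        raise ValueError("no full backup was taken before the target time")
    base = fulls[-1]
    # A differential holds every change since the last full backup, so only the newest is needed.
    diffs = [b for b in usable if b.kind == "differential" and b.taken_at > base.taken_at]
    return [base] + diffs[-1:]


if __name__ == "__main__":
    history = [
        Backup("full", datetime(2024, 1, 7)),
        Backup("differential", datetime(2024, 1, 8)),
        Backup("differential", datetime(2024, 1, 9)),
        Backup("full", datetime(2024, 1, 14)),
    ]
    for b in restore_chain(history, target=datetime(2024, 1, 9, 12)):
        print(b.kind, b.taken_at.date())
    # full 2024-01-07
    # differential 2024-01-09
```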

Real-World Applications of High Availability Clustering

High availability (HA) clusters are essential in maintaining continuous service with as little downtime as possible across multiple sectors. These clusters ensure uninterrupted access to vital financial services and data in the Banking, Financial Services, and Insurance (BFSI) industry. HA clustering also serves a critical function within healthcare by keeping patient records and medical applications always accessible, which aids healthcare providers in offering timely and efficient treatment without interruption.

For e-commerce operations, high availability clustering is pivotal for smoothly processing substantial transaction volumes to enhance customer shopping experiences. It’s similarly crucial for government bodies that depend on HA clusters to provide consistent public service application performance. Educational institutions leverage high-availability clustering technology to provide continuous accessibility to online learning materials, while telecommunications organizations employ it to deliver unfailing communication services.

High availability (HA) clustering is thus paramount in delivering unbroken services with minimal operational interruptions across diverse industries.

High availability clustering forms the bedrock of contemporary IT frameworks, guaranteeing that services stay up and running despite setbacks in hardware or software. By grasping essential elements, techniques, and recommended approaches for implementation, enterprises can craft strong, high-availability architectures that reduce downtime and safeguard continuous operation.

Across sectors such as finance, healthcare, e-commerce, and telecommunications, high availability clustering is pivotal for delivering continuous access to services and boosting system resilience. Enterprises that follow best practices for maintenance, monitoring, and recovery can keep their high-availability infrastructure dependable and raise the standard of reliability and performance across their IT landscape.

Frequently Asked Questions

What is high availability clustering?

High availability clustering is an arrangement in which multiple computers work together to provide constant service, so that functionality continues uninterrupted even if any single component fails.

How does load balancing work in high availability clusters?

By evenly dispersing workloads across multiple servers, load balancing prevents any resource from being overwhelmed and ensures efficient functioning. This contributes to sustaining high availability within server clusters.

What is the difference between active/passive and active/active clusters?

In active/passive configurations, the primary node manages request processing while the secondary nodes remain in ready mode for backup. Conversely, an active/active cluster arrangement distributes traffic and workload evenly among all nodes, leading to enhanced resource use and improved system availability.

Why is shared storage important in high availability clusters?

Shared storage keeps data replicated and redundant across a high-availability cluster. Even when one node fails, access to vital data is not disrupted, so the cluster stays highly available.

How does ScaleGrid support high availability for databases?

ScaleGrid ensures uninterrupted access to essential data and services by providing high availability for databases. This is achieved with functionalities that include real-time data replication, load balancing, and automatic failover capabilities.